Subjectify.us

Crowd-sourced subjective quality evaluation platform

What is it?

Subjectify.us is a web platform for conducting crowd-sourced subjective comparisons. The service is designed for comparing image, video, and sound processing methods. Apply your algorithm, its modifications, and competing approaches to your test dataset, then upload the output to our server. We show the results produced by different methods to paid participants in pairs, and the participants choose the better method in each pair. We then convert the pairwise comparison data into final scores and provide you with a detailed report.

Main use cases

Here’s how it works

What you do

You apply your algorithm and its competitors to your test dataset.

What we provide

We recruit study participants and present your data to them in pairs.

We process the collected responses and generate a detailed study report.

Main features

Pricing

Get an estimate of your study budget.

Volume discounts are available. Please contact [email protected] to get a price estimate.

Need some help?

Complete the form below or email us to get free support with everything from study planning and dataset preparation to analysis of the final report.

Case studies

In the posts below we show how Subjectify.us was used to conduct sample studies.

What people are saying about us

Subjectify.us could revolutionize subjective comparisons.

Subjective tests are time-consuming and expensive to produce, yet really are the gold standard. In this regard, Subjectify may be a great alternative for researchers and producers seeking to choose the best codec or best encoding parameters.

Streaming media producer and consultant

Our team is developing methods for generating new views of a given video. It is important for us to know how end viewers perceive the synthesized videos or images, so subjective evaluation is crucial for us. We wanted each study participant to evaluate videos produced by various view generation methods individually. The Subjectify.us platform is an effective tool that satisfied our needs.

PhD student, Peking University

My research required me to choose the pano processing method with the best visual quality. For my previous subjective tests, I had to ask colleagues to take part as respondents. However, this was convenient neither for my colleagues (as it interfered with their work) nor for me. Due to the limited number of respondents, I was able to compare just two pano processing methods, but I wanted to compare more alternatives. Subjectify.us helped me overcome all these issues. The platform took care of all technical aspects and collected all responses in less than one day. Subjectify.us collected an order of magnitude more responses than I was able to collect in my prior experiments. The platform enabled us to reliably confirm that our automatic pano processing method outperforms manual processing.

Researcher

Research powered by Subjectify.us

MSU Video Codec Comparison 2017

Part III: Full HD Content, Subjective Evaluation

7 video codecs under comparison. 4 test video sequences.

11,530 pairwise judgments from 325 participants.

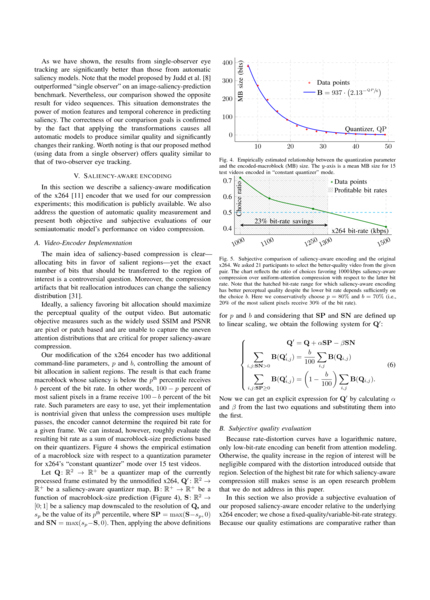

A semiautomatic saliency model and its application to video compression

International Conference on Intelligent Computer Communication and Processing 2017

346 participants. A saliency-aware video codec was compared with x264.

Perceptually Motivated Benchmark for Video Matting

2015 British Machine Vision Conference (BMVC)

442 participants. 12 video and image matting algorithms were compared.

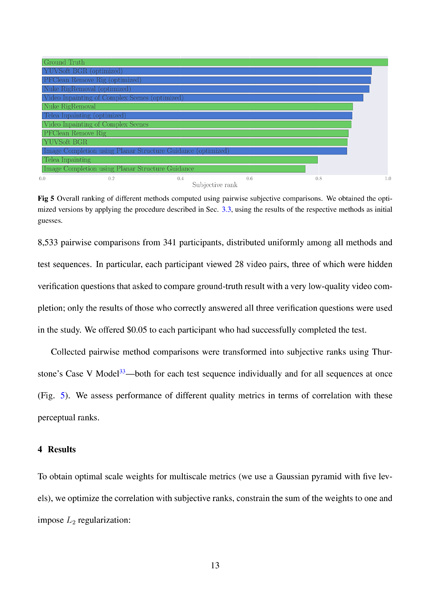

Toward an objective benchmark for video completion

Submitted to Signal, Image and Video Processing Journal

341 participants. 13 video and image completion algorithms were compared.

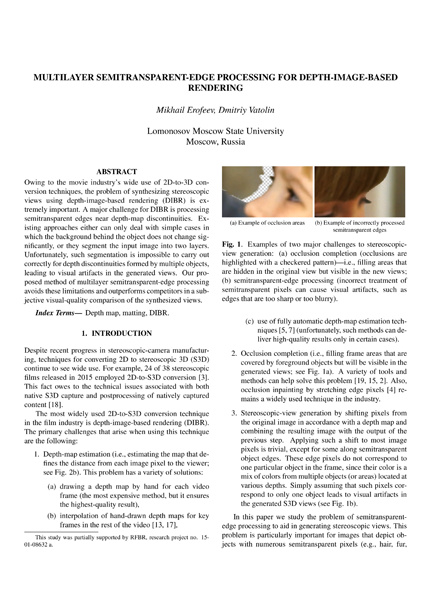

Multilayer semitransparent-edge processing for depth-image-based rendering

2016 International Conference on 3D Imaging (IC3D)

56 participants. 3 depth-image-based rendering methods were compared.

Best Paper Award.

FAQ

Study participants view test images/videos in a web browser on their desktop or laptop computers at home. We enforce fullscreen mode to ensure that the entire screen area is used for viewing content.

Subjectify.us presents images/videos to study participants in pairs. When creating a comparison project, you can choose either parallel (side-by-side) or sequential display of the two images/videos in a pair. Participants are asked to choose the better option in each pair or to indicate that both options have equal quality. The platform pays an honorarium to study participants who successfully compare a certain number of pairs.
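One way to assemble a single participant's session from this pairwise setup is sketched below. It is a hypothetical illustration, not the platform's actual scheduler: the function name `build_session`, uniform random sampling of pairs, and randomizing which option appears on the left (or first) are all assumptions, and quality-control pairs are left out.

```python
import itertools
import random


def build_session(methods, test_cases, pairs_per_session, seed=None):
    """Return a list of (test_case, left, right) comparisons for one participant.

    Hypothetical sketch: every unordered pair of methods within each test case is
    a candidate; candidates are shuffled, and the display order (left/right or
    first/second) is randomized to reduce position bias.
    """
    rng = random.Random(seed)
    candidates = [
        (case, a, b)
        for case in test_cases
        for a, b in itertools.combinations(methods, 2)
    ]
    rng.shuffle(candidates)
    session = []
    for case, a, b in candidates[:pairs_per_session]:
        if rng.random() < 0.5:
            a, b = b, a  # swap display order for this pair
        session.append((case, a, b))
    return session
```

For example, `build_session(["ours", "baseline", "competitor"], ["clip1", "clip2"], 5, seed=1)` draws 5 of the 6 possible (test case, method pair) combinations.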

Unfortunately, crowd-sourced studies don’t give you a fully controlled environment (e.g., you cannot control viewing distance). However, they let you collect responses from significantly more participants than you could bring to your lab, while saving time and money. Furthermore, crowd-sourced studies yield results collected in a diverse set of real-world environments instead of in one perfect (but possibly unrealistic) laboratory environment.

To ensure that respondents make thoughtful selections, we ask them to compare quality-control pairs alongside regular pairs. Each quality-control pair consists of samples with a significant quality difference. If a participant fails to choose the higher-quality sample, their responses are discarded.

To generate quality-control pairs, Subjectify.us uses verification questions that you define when creating a comparison project. To create a verification question, choose two image/video samples in your comparison that have a significant quality difference. For example, if you are comparing video codecs, you can choose a video compressed at 1 Mbps and the same video compressed at 5 Mbps. Set the higher-quality option as the “Leader” and the lower-quality option as the “Follower”. We recommend creating at least two verification questions.
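The filtering rule for quality-control pairs can be sketched as follows. This is a minimal illustration under stated assumptions: the `Judgment` record, the `filter_participants` name, and the mapping of each verification pair to its Leader are hypothetical, and answering "equal" on a verification pair is treated as a failure because the participant did not pick the higher-quality sample.

```python
from dataclasses import dataclass


@dataclass
class Judgment:
    participant: str
    left: str
    right: str
    choice: str  # "left", "right", or "equal"


def filter_participants(judgments, verification):
    """Keep regular judgments only from participants who passed every verification pair.

    `verification` maps frozenset({leader, follower}) -> leader (hypothetical format).
    Answering "equal" on a verification pair counts as a failure (assumption).
    """
    failed = set()
    for j in judgments:
        leader = verification.get(frozenset((j.left, j.right)))
        if leader is None:
            continue  # regular pair, not a quality-control pair
        picked = {"left": j.left, "right": j.right}.get(j.choice)
        if picked != leader:
            failed.add(j.participant)
    # Drop failed participants entirely, and drop the verification pairs themselves.
    return [
        j for j in judgments
        if j.participant not in failed
        and frozenset((j.left, j.right)) not in verification
    ]
```

With a Leader of `"v@5Mbps"` and a Follower of `"v@1Mbps"`, a participant who picks the 1 Mbps sample on that pair loses all of their judgments.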

Please contact [email protected] if you need help with verification question definition.

We have shown that the results of a study conducted with Subjectify.us are close to the results of a traditional MOS (mean opinion score) study conducted in a laboratory. To do so, we used Subjectify.us to replicate a user study that a third party had conducted in a well-controlled laboratory environment, and then compared the results of the two studies. Furthermore, we showed that the results obtained with Subjectify.us correlate significantly better with the laboratory results than scores computed using objective metrics do.

A detailed description of this experiment is available on our blog.
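Agreement between two sets of scores, such as crowd-sourced scores and laboratory MOS values for the same alternatives, is commonly measured with Pearson and Spearman correlation. The page does not state which coefficient the experiment used, so the sketch below simply implements both from scratch; the function names are illustrative.

```python
import math


def pearson(x, y):
    """Pearson linear correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg
        i = j + 1
    return result


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))
```

Spearman correlation only checks whether both studies rank the alternatives the same way, which suits relative scores like those described in this FAQ.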

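To convert the pairwise judgments given by study participants into final subjective scores, we use the Bradley-Terry model. The Bradley-Terry model assumes that the probability of choosing option A with score $s_A$ over option B with score $s_B$ is

$$P(A \succ B) = \frac{e^{s_A}}{e^{s_A} + e^{s_B}}.$$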

We choose the subjective score values that maximize the likelihood of the collected pairwise judgments.
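A maximum-likelihood Bradley-Terry fit can be sketched with Hunter's MM (minorization-maximization) iteration on strengths $p_i = e^{s_i}$. This is not necessarily the optimizer Subjectify.us uses, and two details are assumptions: "equal quality" answers count as half a win for each side, and scores are centered to mean 0 because only their differences matter. The MLE exists only if every alternative wins at least once and the comparison graph is connected.

```python
import math
from collections import defaultdict


def bradley_terry(judgments, iterations=1000, tol=1e-10):
    """Fit Bradley-Terry scores by maximum likelihood (MM algorithm).

    judgments: iterable of (a, b, outcome), outcome in {"first", "second", "equal"}.
    Ties count as half a win for each side (assumption).
    Returns {item: score}, centered so that the scores have mean 0.
    """
    wins = defaultdict(float)
    n = defaultdict(float)  # n[(i, j)]: number of comparisons between i and j
    items = set()
    for a, b, outcome in judgments:
        items.update((a, b))
        n[(a, b)] += 1
        n[(b, a)] += 1
        if outcome == "first":
            wins[a] += 1
        elif outcome == "second":
            wins[b] += 1
        else:
            wins[a] += 0.5
            wins[b] += 0.5
    items = sorted(items)
    if any(wins[i] == 0 for i in items):
        raise ValueError("every item needs at least one win for the MLE to exist")

    p = {i: 1.0 for i in items}  # strengths p_i = exp(s_i)
    for _ in range(iterations):
        new = {}
        for i in items:
            denom = sum(n[(i, j)] / (p[i] + p[j]) for j in items if j != i)
            new[i] = wins[i] / denom  # MM update
        # Rescale so the geometric mean of strengths is 1 (mean score 0).
        g = math.exp(sum(math.log(v) for v in new.values()) / len(new))
        new = {i: v / g for i, v in new.items()}
        delta = max(abs(new[i] - p[i]) for i in items)
        p = new
        if delta < tol:
            break
    return {i: math.log(v) for i, v in p.items()}
```

With two alternatives where A wins 3 of 4 comparisons, the fitted scores differ by $\ln 3$, which reproduces the observed 75% preference rate.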

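Subjectify.us scores should be interpreted as “higher is better”. More formally, given that alternative A has score $s_A$ and alternative B has score $s_B$, $s_A > s_B$ means that A is more likely than B to be chosen in a pairwise comparison, i.e., $P(A \succ B) > 0.5$.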

Please note that, due to the relative nature of pairwise comparisons, you should not rely on absolute score values. Subjectify.us scores are meaningful only in comparison with other scores from the same test case.
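Why only score differences matter can be checked numerically. The sketch below assumes the logistic form of the Bradley-Terry model, $P(A \succ B) = e^{s_A} / (e^{s_A} + e^{s_B})$; the function name is illustrative. Adding the same constant to both scores leaves the predicted preference unchanged.

```python
import math


def preference_probability(s_a, s_b):
    """P(A is preferred over B) under the logistic Bradley-Terry model (assumed form).

    Equivalent to exp(s_a) / (exp(s_a) + exp(s_b)); it depends only on s_a - s_b.
    """
    return 1.0 / (1.0 + math.exp(s_b - s_a))
```

For instance, scores 2 vs. 1 and 12 vs. 11 give the same probability (about 0.73), and equal scores give exactly 0.5.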

You can export the collected responses in CSV format by clicking the briefcase icon in the study report toolbar.
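Once exported, the responses can be tallied with the standard `csv` module. The column names below (`test_case`, `left`, `right`, `choice`) are hypothetical, since the export format is not described here; adjust them to the header of your actual file.

```python
import csv
import io
from collections import Counter


def count_wins(csv_text):
    """Count wins per (test_case, winner, loser) from exported CSV text.

    Column names are hypothetical; "equal" answers are not counted as wins here.
    """
    wins = Counter()
    for row in csv.DictReader(io.StringIO(csv_text)):
        if row["choice"] == "left":
            wins[(row["test_case"], row["left"], row["right"])] += 1
        elif row["choice"] == "right":
            wins[(row["test_case"], row["right"], row["left"])] += 1
    return wins
```

To read a file, pass `open(path, newline="").read()` as `csv_text`.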

We charge $2 per study participation. During one participation, the respondent compares either 20 image pairs or 10 video pairs (this number can be adjusted depending on video duration). The number of pairwise judgments required for your project depends on the number of alternatives being compared and the quality gap between them. We give a 50% discount to clients from non-profit and educational institutions.
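The pricing rules above translate into a rough budget estimate. This sketch assumes you already know how many pairwise judgments you need (the FAQ gives no formula for that), ignores quality-control pairs and volume discounts, and uses the default pairs-per-participation values; the final price is always negotiated.

```python
import math

PRICE_PER_PARTICIPATION = 2.00  # USD
PAIRS_PER_PARTICIPATION = {"image": 20, "video": 10}  # video count may vary with duration


def estimate_budget(judgments_needed, media="video", non_profit=False):
    """Return (participations, cost_usd) for a rough budget estimate."""
    participations = math.ceil(judgments_needed / PAIRS_PER_PARTICIPATION[media])
    cost = participations * PRICE_PER_PARTICIPATION
    if non_profit:
        cost *= 0.5  # 50% discount for non-profit / educational institutions
    return participations, cost
```

For example, 3,000 image judgments require 150 participations, or about $300 ($150 with the non-profit discount).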

We always negotiate the final price with you before response collection starts. You can get an estimate of your study budget using the calculator on our website.

You are welcome to share the study results with anyone or include them in your scientific papers. We also support embedding our reports in third-party websites (please contact [email protected] if you need help with embedding).

To ensure that study participants can view your files in a web browser, we preprocess all files you upload to Subjectify.us.

- The images are losslessly converted to PNG format.

- The videos are transcoded to H.264 streams with --crf 16 so that no perceptible distortions are introduced at the transcoding stage. We can adjust the CRF value or even disable preprocessing for your project upon request; please contact [email protected].
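If you want to preview a similar transcode locally, an ffmpeg command using the libx264 encoder at CRF 16 can be built as shown. This only approximates the preprocessing described above; the actual Subjectify.us pipeline and its other settings are not published here, and `h264_transcode_cmd` is a hypothetical helper.

```python
def h264_transcode_cmd(src, dst, crf=16):
    """Build an ffmpeg argument list that re-encodes `src` to H.264 (libx264).

    Lower CRF values mean higher quality and larger files.
    """
    return ["ffmpeg", "-i", src, "-c:v", "libx264", "-crf", str(crf), dst]
```

Run it with `subprocess.run(h264_transcode_cmd("input.y4m", "output.mp4"), check=True)` on a machine with ffmpeg installed.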
